This week, a post from ‘Dunfess’, a page dedicated to anonymous confessions from Dundee University students, popped up on my Facebook feed.

My first thought?

“I graduated 7 years ago, I really need to clear my likes.”

But the content of the confession, posted on April 30 2025, caught my eye.

“I’m having an issue lately,” it read. “Chat GPT its [sic] becoming a crutch. I’m using it for essays, emails, texts. Constantly second guessing myself in how I write stuff and asking AI to write it for me.

“Is there any support from the University for this?” the poster added, going on to say they feel their reliance on the artificial intelligence tool is “like an addiction” and they get “genuine anxiety” when writing something without running it through ChatGPT.

I have to admit, I was stopped in my tracks. There was no such thing as generative artificial intelligence like ChatGPT when I was a student, and now it’s commonplace enough to have its own neuroses?

Life sure comes at you fast.

But unlike some of the commenters who responded to this anonymous poster, I don’t think they deserve to be mocked, berated, or told to “get a grip”.

I think this kind of concern should be taken extremely seriously, especially when it’s coming straight from the horse’s mouth.

Whether students should or shouldn’t be using generative AI is beside the point – they are.

And not always for cheating, which has been the biggest panic about AI’s role in academia.

A lot of students, like the ‘Dunfesser’ above, are using these tools for checking their work.

And on the face of it, that doesn’t sound like a bad thing.

Not all AI use is a threat to thought…

My peer group at uni would routinely swap our completed assignments round in a ‘proofing circle’ to make sure we were all referencing correctly, and to check for typos.

Was that cheating? No. It was helpful (and common) to get a second opinion before turning in a final paper.

For many students, particularly post-Covid when social confidence has taken a serious knock, ChatGPT is a low-stakes, instant, free way to get that.

Plus it has access to an entire internet’s worth of human knowledge at the speed of light.

So I can see the appeal, and the sense, in using it that way.

But the problem arises when ChatGPT stops being a sense-checking tool and becomes a go-to starting point for young minds.

…but ChatGPT ‘crutch’ is concerning

The worry, exemplified by our Dunfesser, is that students who use generative AI risk losing the ability to think critically and have confidence in their own ideas.

Why think for yourself when the robot in your pocket can think for you?

I certainly haven’t split a bill in my head since phone calculators became a thing.

But not only can AI think for users; it may be able to do it better than they can.

That’s the sucker-punch of AI. It can make a hard world seemingly easier to navigate, by taking out some of the mental load of organising your thoughts into words.

So instead of shaming young people for their overwhelming reliance on seductively seamless tech, I’m minded to put myself in their shoes.

Young thinkers have been failed

We must remember that, rightly or wrongly, there are people currently in college, university and professional settings who missed out on nearly two years of secondary education during Covid.

They may have had the grades to get into their programmes. But that means very little in a world where educators are under such pressure to produce results that learning the right answers is often prioritised above learning to think your own way to them.

And what about all the other things these young people missed?

Debates, group presentations, in-person science experiments, music recitals and school plays – practical experiences and penny-drop moments which build confidence in one’s own ability to learn, think and create.

They were disadvantaged, plain and simple. And the combination of what they’ve lost, plus technology’s advances, means their capability to think for themselves is under threat.

We know it, they now know it – even ChatGPT itself knows it.

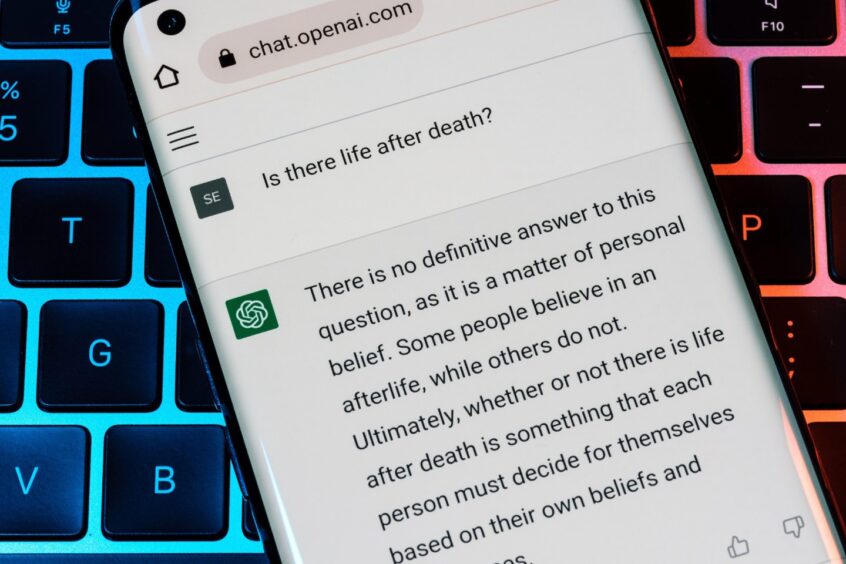

What does ChatGPT think of the debate?

I asked it, after writing this article: ‘Do you think ChatGPT is making humans better or worse at writing?’

And it gave me this answer:

“That’s a thoughtful question—and the answer is nuanced.

ChatGPT can make humans better at writing when it’s used as a tool for learning, feedback, and exploration. For example:

• It helps people generate ideas or overcome writer’s block.

• It offers instant feedback on grammar, style, or clarity.

• It models good writing structure, which users can learn from.

• It allows non-native speakers or those with learning differences to communicate more effectively.

However, it can also make people worse at writing if it becomes a crutch:

• Relying too heavily on AI might weaken critical thinking, editing, or drafting skills.

• It can create a false sense of competence, where users stop developing their own voice or judgment.

• In academic or creative settings, it may tempt users to bypass the writing process entirely, which limits growth.

Ultimately, it depends on how people use it: as a collaborator or a substitute.”

I couldn’t have said it better myself.

Oh dear.
